Building an online image compressor

Recently, I stumbled upon this Reddit post where OP says he made $1000 from his image compressor. He built it in 2 hours. After quickly analyzing the website, I figured it couldn't be too hard to make my own.

The objective was to build a fast image compressor with:

- no upload and no sign-up

- no limit on the number of images

- no file size limit

- privacy-friendly by design

After one week of work, www.bulkcompress.photos was born.

This post summarizes how I built it.

JPEG encoding

One of the first things I did was research JPEG and its efficient lossy compression algorithm. JPEG is the most popular image format for photography. It is widely known and supported, so there are tons of resources about it.

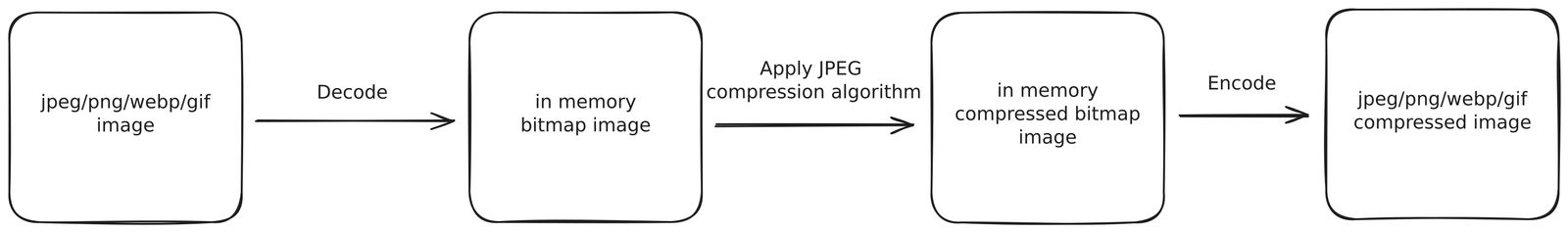

My original plan was to apply JPEG compression to other image format as follow:

I already had some knowledge of JPEG since I studied it a few years back. It was time for a refresher. This article and the JPEG Wikipedia page are great for understanding how the algorithm works overall.

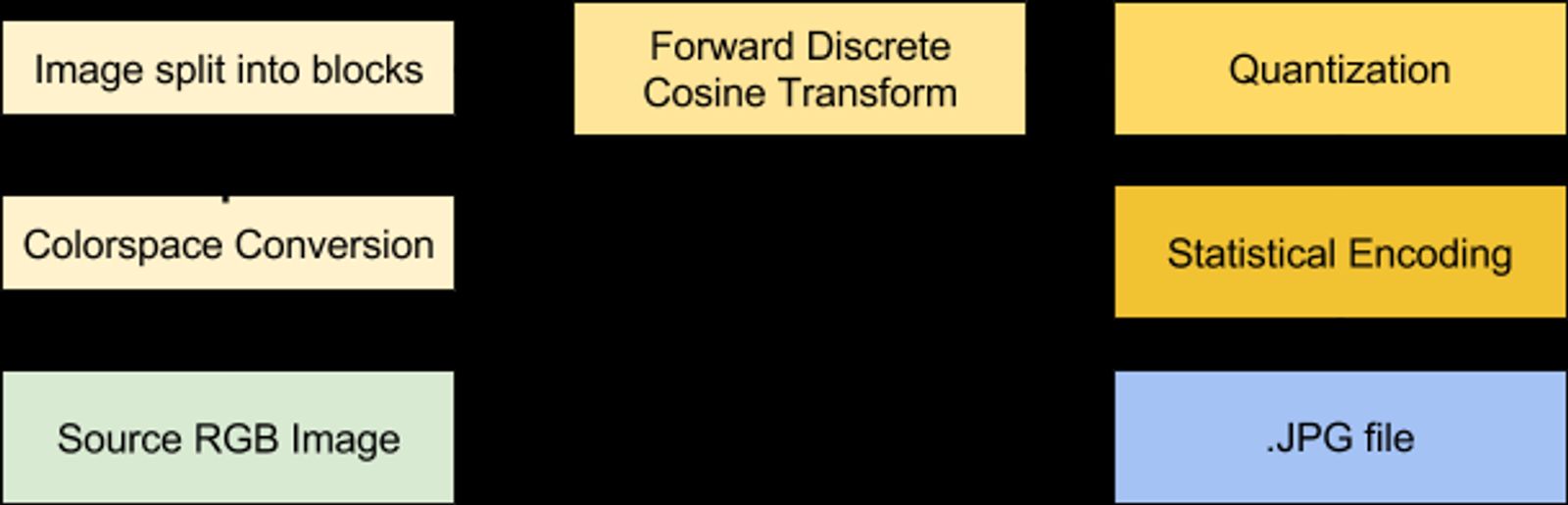

Interesting part of JPEG encoding is the quantization step. This is where data loss occurs. Many videos and images encoding systems favors quantization of chrominance (middle image) over luminance (left image) as human eye is more sensible to the latter:

You can play with chrominance and luminance on this playground to get better insight.
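To make the luminance/chrominance split more concrete, here is a minimal sketch, not a real JPEG encoder. It converts an RGB pixel to YCbCr with the standard JFIF coefficients, then quantizes a few made-up coefficients with two different step sizes. Real JPEG applies per-frequency quantization tables to the DCT coefficients of 8x8 blocks; the values and the single step per channel below are assumptions chosen for illustration.

```javascript
// Convert one 8-bit RGB pixel to YCbCr (JFIF / BT.601 full-range coefficients).
function rgbToYCbCr(r, g, b) {
  const clamp = (v) => Math.min(255, Math.max(0, Math.round(v)));
  return {
    y: clamp(0.299 * r + 0.587 * g + 0.114 * b), // luminance
    cb: clamp(128 - 0.168736 * r - 0.331264 * g + 0.5 * b), // blue chrominance
    cr: clamp(128 + 0.5 * r - 0.418688 * g - 0.081312 * b), // red chrominance
  };
}

// Quantization: divide by a step and round. The rounding is where data is lost.
function quantize(coefficients, step) {
  return coefficients.map((c) => Math.round(c / step));
}

// Dequantization: multiply back. The result only approximates the input.
function dequantize(levels, step) {
  return levels.map((q) => q * step);
}

// Hypothetical coefficients and step sizes, for illustration only.
const sampleCoefficients = [-415, 29, -61, 27];
const lumaStep = 4; // finer step: luminance keeps more detail
const chromaStep = 16; // coarser step: chrominance loses more detail

console.log(dequantize(quantize(sampleCoefficients, lumaStep), lumaStep));
console.log(dequantize(quantize(sampleCoefficients, chromaStep), chromaStep));
```

The coarser the step, the further the reconstructed values drift from the originals, and the more small values collapse to the same level, which is what makes the data cheaper to encode.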

Implementation

Image processing requires manipulating bytes and performing some complex computations. JavaScript isn’t a good fit for this purpose, but it’s 2024 and WebAssembly is a thing.

One of the most complete image compressors out there, squoosh.app by Google, uses WebAssembly for decoding and encoding images, and it works pretty well.

My first implementation was based on photon_rs, a Rust-based WebAssembly library for image processing. It worked pretty well, but it was slower than OP's website. Without digging much, this seemed logical since photon isn’t optimized for performance.

After some research, I found libvips, a demand-driven, horizontally threaded image processing library. It is designed to run quickly while using as little memory as possible.

After some manual tests, to my surprise, it was still slower than OP's website. Digging into their source code showed that they aren’t even using WebAssembly.

Is JavaScript faster than WebAssembly?

Nope, but JavaScript calls to standard web APIs, implemented in C++ by web browsers and improved over decades, are.

It turns out that to compress an image, the following code is all you need:

```javascript
async function compress(imageFile, { quality }) {
  // Decode the file into a bitmap using the browser's native decoder.
  const bitmap = await createImageBitmap(imageFile);

  const offscreenCanvas = new OffscreenCanvas(bitmap.width, bitmap.height);
  const ctx = offscreenCanvas.getContext("2d");
  // Draw at the bitmap's original size; pass smaller values here to resize.
  ctx.drawImage(bitmap, 0, 0, bitmap.width, bitmap.height);

  // Encode canvas bitmap as an image of type imageFile.type.
  // https://developer.mozilla.org/en-US/docs/Web/API/OffscreenCanvas/convertToBlob
  const blob = await offscreenCanvas.convertToBlob({
    type: imageFile.type,
    quality, // only used if imageFile.type is "image/jpeg" or "image/webp"
  });

  return blob.arrayBuffer();
}
```
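Resizing fits into the same pipeline: pick a target width and height, size the canvas accordingly, and let drawImage scale the bitmap. The sketch below is one way to do it, not necessarily how the site does it. The names fitWithin, compressResized, maxWidth and maxHeight are hypothetical, and compressResized only runs in a browser (or a worker) that supports createImageBitmap and OffscreenCanvas.

```javascript
// Largest size that fits inside maxWidth x maxHeight while preserving
// the aspect ratio. Never upscales (scale is capped at 1).
function fitWithin(width, height, maxWidth, maxHeight) {
  const scale = Math.min(1, maxWidth / width, maxHeight / height);
  return {
    width: Math.max(1, Math.round(width * scale)),
    height: Math.max(1, Math.round(height * scale)),
  };
}

// Browser-only: same steps as the compress function, but with a smaller canvas.
async function compressResized(imageFile, { quality, maxWidth, maxHeight }) {
  const bitmap = await createImageBitmap(imageFile);
  const { width, height } = fitWithin(
    bitmap.width,
    bitmap.height,
    maxWidth,
    maxHeight,
  );

  const canvas = new OffscreenCanvas(width, height);
  const ctx = canvas.getContext("2d");
  ctx.drawImage(bitmap, 0, 0, width, height); // scales the bitmap to the canvas
  bitmap.close(); // release the decoded pixels as soon as they are drawn

  const blob = await canvas.convertToBlob({ type: imageFile.type, quality });
  return blob.arrayBuffer();
}
```

For example, a 4000x3000 photo constrained to 1920x1080 comes out at 1440x1080, since the height is the limiting dimension.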

www.bulkcompress.photos is just an optimized GUI around the above function. I’ve also added support for resizing images and converting them to another format. Finally, to improve performance, I used the worker pool I developed for my load testing tool: https://github.com/denoload/denoload.
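The core idea behind a pool is to keep a fixed number of jobs in flight while working through a queue. Below is a simplified sketch of that idea using plain promises. It is not the denoload worker pool, and it does not spawn real Web Workers; the helper name mapWithConcurrency is hypothetical. In a real pool, each runner would be a worker receiving files through postMessage.

```javascript
// Run `task` over `items` with at most `concurrency` tasks in flight.
// Results keep the same order as `items`.
async function mapWithConcurrency(items, concurrency, task) {
  const results = new Array(items.length);
  let next = 0; // index of the next item to pick up

  // Each runner pulls items from the shared queue until it is empty.
  async function runner() {
    while (next < items.length) {
      const index = next++; // no race: this runs synchronously between awaits
      results[index] = await task(items[index], index);
    }
  }

  const runners = Array.from(
    { length: Math.min(concurrency, items.length) },
    () => runner(),
  );
  await Promise.all(runners);
  return results;
}

// Possible usage in the browser, assuming `files` is an array of File objects:
//   const buffers = await mapWithConcurrency(
//     files,
//     navigator.hardwareConcurrency ?? 4,
//     (file) => compress(file, { quality: 0.8 }),
//   );
```

Bounding the number of concurrent jobs keeps memory usage predictable when a user drops hundreds of large images at once, since only a few decoded bitmaps exist at any given time.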

If you’re interested in Chromium’s implementation of OffscreenCanvas.convertToBlob, here is a link to the code that performs the actual encoding: ImageDataBuffer::EncodeImageInternal.

Conclusion

Originally, I wanted to create a paid version of my image compressor, but it didn't feel right. Despite spending a significant amount of time on it, charging users to use their own computing power and an algorithm implemented by someone else seemed wrong. In the end, I decided that the service is and will remain free and ad-free.

Also, the source code is available here.